You hear a lot about Google algorithm updates and may have some trouble keeping them straight. There are over 200 components to Google’s algorithm, and most are not defined publicly. SEOs like to focus on the ones we can control:

- Trust and Authority

- Link Popularity of a Specific Page

- Anchor Text of External and Internal Links

- Title tag and H1 Text

- On-Page Keyword Usage

- Traffic and CTR data

- Social Signals

The most influential Google Algorithm updates are the ones that get special development, names, and public announcement. By publicly announcing the updates, Google signals the market on where content can be improved and rewarded for better user experience.

So what are the important Google algorithm updates that you need to know about? We will go into each in detail, but the main five as they pertain to search engine optimization are Penguin, Panda, Hummingbird, Pigeon and Phantom. These Google algorithm updates all changed the game of SEO strategy in different ways, but they all had one overall goal: to improve users' search experience across the web.

It’s important to understand the implications of Google algorithm updates. Unnatural links or duplicate content can lead to a page or pages on your site getting de-indexed, meaning they won’t show up in the SERPs at all. This is usually followed by a drastic drop in organic traffic to your site.

The What and Why of the Google Update Penguin

Google Penguin targeted sites with spammy backlink profiles. This means that if your site had links pointing to it from sites with low trust or authority, or if it engaged in link schemes, keyword stuffing, over-optimization, or unnatural link building, it would get hit by a Penguin penalty. BrightEdge customers can use the Backlinks Report to see the changes over time.

Subsequent rollouts of Penguin expanded Google’s quality standards for backlink profiles, deeming the following as low quality:

- Paid Links

- Sitewide links

- Low Quality Directories

- Spammy Blog and Forum Comments

Preventing and Recovering from the Penguin Penalty

It’s easy to identify sitewide links through Google Webmaster Tools—do you have multiple links from your own website or another that look suspicious? It’s time to reevaluate your linking strategy.

Google Search Console (formerly Webmaster Tools) will also tell you if your site has been penalized: the Manual Actions section lists any manual penalties applied to your site. Purely algorithmic demotions do not appear there, so a sudden drop in rankings or traffic is worth investigating as well. If you see a manual action, you want to go into recovery mode immediately to regain any lost traffic or rankings.

You can see your entire backlink profile in Webmaster Tools, and you should know what to ask:

- What is the quality of linking sites? Do they look trustworthy?

- How relevant are the links? Do the links listed make sense for your business from an industry or local perspective?

- How quickly were they acquired? Speed of link building is taken into consideration, so make sure your links are being built naturally and on a consistent basis.
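The "how quickly" question can be roughed out with a few lines of Python. This is only a sketch: the `backlinks` list stands in for whatever export your backlink tool provides, and the domain names, dates, and the `factor` threshold are all made-up assumptions, not values Google publishes.

```python
from collections import Counter
from datetime import date

# Hypothetical export: (linking domain, date the link was first seen).
backlinks = [
    ("example-blog.com", date(2015, 1, 12)),
    ("example-news.com", date(2015, 2, 3)),
    ("spammy-directory.example", date(2015, 3, 1)),
    ("spammy-directory.example", date(2015, 3, 2)),
    ("spammy-directory.example", date(2015, 3, 2)),
    ("spammy-directory.example", date(2015, 3, 4)),
]

def links_per_month(links):
    """Count newly discovered links per (year, month)."""
    return Counter((seen.year, seen.month) for _, seen in links)

def links_per_domain(links):
    """Count links per linking domain; high counts may indicate sitewide links."""
    return Counter(domain for domain, _ in links)

def flag_spikes(monthly_counts, factor=3.0):
    """Return months whose count exceeds `factor` times the (upper) median month.

    The factor is an arbitrary illustration, not a known ranking threshold.
    """
    counts = sorted(monthly_counts.values())
    median = counts[len(counts) // 2]
    return sorted(m for m, c in monthly_counts.items() if c > factor * median)

if __name__ == "__main__":
    print(flag_spikes(links_per_month(backlinks)))
    print(links_per_domain(backlinks).most_common(1))
```

A flagged month or a domain with an unusually high link count is a prompt for manual review, not proof of a problem.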

If you’re in the unfortunate recovery mode, identify the links that are irrelevant, low quality, or against Google’s guidelines. The removal of unnatural links must be attempted manually at first. This is where you have some work ahead of you: you need to reach out to the sites and ask for a removal. In some cases, if the site is unwilling to remove a link, or if your business requires sitewide links (for example, email marketing and newsletter signups tend to employ this strategy), you can move on to Google’s Disavow Tool.

The Disavow Tool allows users to submit a list of URLs or domains whose links they want Google to ignore if manual removal is unsuccessful. The links themselves still exist, but the list tells Google that you are not associating your site with the URLs listed; essentially, you are acknowledging that the poor-quality links exist and that you do not want to be penalized for them.

A few notes to consider when you’re reviewing your backlink profile or submitting the disavow file: it is not a shortcut approach. You have to invest the time by reviewing each page, and it’s often better to disavow at a domain level if you’re unsure about the quality of the link. Remember that if you have a large number of links you’re removing, you’ll need to implement a slow but steady strategy to regain quality, relevant links in their place and avoid any loss of domain authority along the way. Make sure you follow Google’s instructions to the letter, including the setup of your disavow .txt file for upload and the language of your Reconsideration Request.
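For reference, a disavow file is plain text with one entry per line: a full URL, a `domain:` entry to cover a whole domain, or a comment line beginning with `#`. The sketch below shows one way to assemble such a file in Python; the function name `build_disavow_file` and all the URLs are hypothetical, and you should still check the output against Google's current instructions before uploading.

```python
from urllib.parse import urlparse

def build_disavow_file(urls, domains, note=""):
    """Build disavow file text: optional comment, domain entries, then URLs.

    URLs whose host is already covered by a domain entry are skipped.
    """
    lines = []
    if note:
        lines.append(f"# {note}")
    covered = set(domains)
    for domain in sorted(covered):
        lines.append(f"domain:{domain}")
    for url in sorted(set(urls)):
        host = urlparse(url).hostname or ""
        if host in covered:
            continue  # the domain-level entry already covers this URL
        lines.append(url)
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    text = build_disavow_file(
        urls=["http://spam.example/page1.html", "http://bad.example/links.html"],
        domains=["spam.example"],
        note="Owner did not respond to removal requests",
    )
    # Save as a UTF-8 .txt file for upload.
    with open("disavow.txt", "w", encoding="utf-8") as handle:
        handle.write(text)
```

Listing domain entries first and dropping the URLs they already cover keeps the file short and easier to review.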

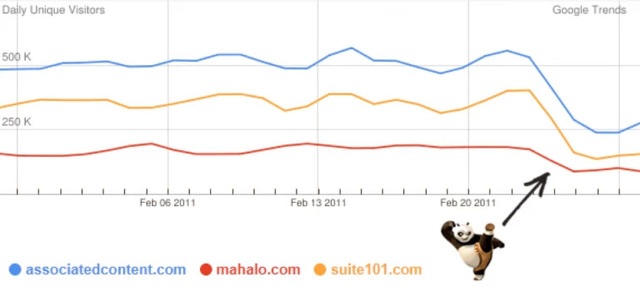

Google Algorithm Updates, Panda’s Attack on Low-Quality Content

The first Google Panda update was designed to reduce organic search rankings for low-quality sites: sites that offer little value to users, copy content from other websites, or are just not very useful. At the same time, it provided better rankings for high-quality sites. A bit of inside knowledge: the name Panda came from the Google engineer who designed it, Navneet Panda.

Preventing Panda’s Wrath

Creating unique, high-quality user experiences on your site is essential. Think about your end goal: whether it’s sales or education, deliver the content on your site in a way that is helpful to users and unique to your business. BrightEdge users can use multiple capabilities, from Keyword Reporting to Page Reporting to Site Recommendations, to target content, measure results, and optimize the content. To judge the quality of your content, ask questions like these:

- Would you trust the information presented in this article?

- Is this article written by an expert?

- Does the site have duplicate articles?

- Are the topics driven by genuine interests of readers of the site?

- Does the page provide substantial value when compared to other pages in search results?

Recovery

If you’ve already been hit by Panda, there are steps you can take to recover. First check your Google Analytics or other reporting tool to see if your organic traffic has dropped significantly. If you’ve seen a substantial drop, check for two things on your site:

- Syndicated content

- Duplicate content
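One rough way to look for near-duplicate pages is to compare their text with word shingles and Jaccard similarity. The sketch below is an illustration only: it is not how Google detects duplicates, and the shingle size and any similarity cutoff you choose are assumptions to tune against your own pages.

```python
import re

def shingles(text, size=3):
    """Return the set of overlapping word n-grams ("shingles") in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def jaccard(text_a, text_b, size=3):
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0  # too little text to compare
    return len(a & b) / len(a | b)

if __name__ == "__main__":
    page_a = "our red widget is durable and ships free"
    page_b = "our red widget is durable and ships fast"
    print(round(jaccard(page_a, page_b), 3))
```

Pairs of pages that score high are candidates for consolidation, a canonical tag, or a rewrite.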

If you do find duplicate content or what we call “doorway pages” (pages with thin content that are necessary within the navigational structure), it’s important to use the correct technical markup to keep these pages from being indexed as content for the user.

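You can use a robots.txt file to block pages that you do not want crawled, which is a good solution for standalone pages or landing pages. Keep in mind that robots.txt controls crawling rather than indexing: a blocked URL can still appear in the index if other sites link to it, so a noindex robots meta tag on a page that remains crawlable is the more reliable way to keep a page out of the index.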
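As a rough check, Python's standard `urllib.robotparser` module can confirm which paths a given set of robots.txt rules blocks from crawling. The rules, paths, and the `is_crawlable` helper below are hypothetical examples, not a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules blocking two thin sections of a site.
RULES = """\
User-agent: *
Disallow: /landing/
Disallow: /print/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

def is_crawlable(path, agent="Googlebot"):
    """Return True if the rules allow `agent` to fetch the given path."""
    return parser.can_fetch(agent, "https://www.example.com" + path)

if __name__ == "__main__":
    for path in ("/landing/spring-sale", "/print/article-42", "/products/"):
        print(path, is_crawlable(path))
```

Testing rules this way before deploying them helps avoid accidentally blocking pages you do want crawled.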

If you have an eCommerce site or a deep navigation site with pagination, you want to use the rel=canonical tag. In this instance, the rel=canonical tag passes the ranking power of a page much like a 301 redirect, focusing it on the main category page, which generally has the rich content and targeted keywords.

If you are in the clear with duplicate content, you may want to take a deeper dive into your existing pages, primarily through top landing page reports, engagement and bounce rate reports, and top traffic pages.

Once you have identified underperforming pages, you can take steps to improve the content on your site. Write high-quality content targeting more than just terms. Understand your niche, what people are searching for, and provide killer content that deserves to rank highly.
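To make the canonical idea concrete, here is a minimal sketch that maps paginated or sorted listing URLs back to their main category URL and emits the corresponding tag. The parameter names `page` and `sort` are assumptions about how a site might build its URLs; substitute whatever your platform uses.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def canonical_url(url, drop_params=("page", "sort")):
    """Strip pagination/sorting parameters so variants share one canonical URL."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in drop_params]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def canonical_tag(url):
    """Return the <link rel="canonical"> element for a page's <head>."""
    href = escape(canonical_url(url), quote=True)
    return f'<link rel="canonical" href="{href}">'

if __name__ == "__main__":
    print(canonical_tag("https://www.example.com/shoes?page=3&sort=price"))
```

Whether every paginated page should point at the first page depends on the site, so treat this mapping as one option rather than a rule.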

Search-Based Algorithms—for the User

The next two Google Algorithm updates we’re going to touch on are not penalty-based. Instead, they change the way Google delivers search results in general.

As conversational search becomes the norm, the Google Hummingbird update helps Google understand the intent and contextual meaning of the terms used in a query. With Hummingbird, Google can serve better results for longer-tail queries.

Optimizing for Hummingbird

There are optimization factors to consider with Hummingbird, including the importance of mobile SEO. Conversational search is driven in part by the way people search when on their mobile devices, so mobile optimization is going to continue to be critical.

So how do you tailor your SEO and content marketing strategy to appease Hummingbird? BrightEdge users can use the Data Cube to understand long-tail query opportunities. Otherwise, you’ll want to follow some of these steps:

- Break Down Conversational Queries: Use keywords that sum up longer search terms and use conversational keywords naturally.

- Use Synonymous Search Terms: Optimize for keywords that co-occur in similar contexts.

- Revise Your Anchor Text Strategy: Review your internal linking anchor text and use only relevant keywords.

- Stand Out in Search Results: Check all search results for your key terms—videos, maps, et cetera. Can you create results in these universal search results categories?
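As a starting point for breaking down conversational queries, the sketch below tags queries from a keyword list as long-tail or conversational. The word-count cutoff and the list of question words are arbitrary assumptions for illustration; they are not definitions used by Google.

```python
# Hypothetical heuristics: tune the word list and threshold for your data.
QUESTION_WORDS = {"who", "what", "when", "where", "why", "how", "which", "can", "does", "is"}

def classify_query(query, long_tail_min_words=4):
    """Label a query by length and by whether it opens like a spoken question."""
    words = query.lower().split()
    return {
        "query": query,
        "words": len(words),
        "long_tail": len(words) >= long_tail_min_words,
        "conversational": bool(words) and words[0] in QUESTION_WORDS,
    }

if __name__ == "__main__":
    for q in ("plumber", "emergency plumber near me", "how do i fix a leaky faucet"):
        print(classify_query(q))
```

Grouping the conversational queries by topic can then suggest which questions your content should answer directly.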

Another results-based Google Algorithm update was Google Pigeon, an algorithm change that largely affected local search. We saw 3 major changes in the search engine results for local terms:

- A decrease in the local search radius

- Less duplication of local search results

- Benefit to local search directories

Yelp and other local directory-style sites benefited from higher visibility after the update. In the post-Pigeon era, search results that are authoritatively local will be more prominently featured on the SERPs.

There are several steps to check the effects of and adhere to the latest Pigeon update:

- Run a rank tracker that monitors blended rank. One of the key changes is a lower number of queries that include a local listing pack on their SERPs.

- Analyze local competitors. Local rankings will now be determined more by domain authority, backlinks and other SEO ranking factors.

- Check existing business listings. Local potential clients can find you via local directory sites.

- Focus on Reviews. Work on naturally getting reviews from your best customers on all review sites.

If your local rankings decreased after Pigeon, ensure that your local listings are following local best practices:

- Submit the business to Google Local, Yahoo and Bing, and keep all information accurate and up to date.

- Develop individual local landing pages per location.

- Utilize structured markup on the local landing pages.

- Frequently distribute and manage listings with accurate location information.

- Identify any duplicate or inconsistent listings across the web.
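For the structured markup step, one common approach is a schema.org `LocalBusiness` block in JSON-LD on each location page. The sketch below generates such a block in Python; the business details are invented placeholders, and the set of properties shown is a minimal assumption rather than a complete or required list.

```python
import json

def local_business_jsonld(name, street, city, region, postal_code, phone, url):
    """Return a JSON-LD <script> block describing one business location."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }
    # Escape "</" so a value can never close the script element early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"

if __name__ == "__main__":
    print(local_business_jsonld(
        name="Example Plumbing Co.",
        street="123 Main St",
        city="Springfield",
        region="IL",
        postal_code="62701",
        phone="+1-555-0100",
        url="https://www.example.com/locations/springfield",
    ))
```

Generating the markup from the same source of truth as your directory listings helps keep name, address, and phone details consistent across the web.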

Finding the Phantom in the Google Algorithm Updates

Google’s latest algorithm update carries its quality guidelines into the future of search, tying all past algorithm updates together under one goal focused on user experience.

This algorithm serves to underline Google’s main objective, which is to provide quality and trustworthy content and results. Even without the threat of Phantom looming over your website, it would serve you and your customers to adhere to Google’s guidelines for content creation.

Prevent Phantom penalties by understanding your visitor, what they are searching for, what they are buying, and what they need. Give them that information on your site in a way that is easy to find, easy to read and useful to their end goal.

If you’re seeing a decline in site or page traffic, it’s time to evaluate how your visitors are using your site. Check the traffic flow and exit pages to see where users are dropping off or where you’re appearing in the SERPs. If you’re ranking for a query that isn’t relevant to a page or your site as a whole, create a better page for that topic, or avoid targeting for that query if it’s not consistent with your strategy.
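A simple way to start the exit-page review is to rank pages by exit rate (exits divided by pageviews). The figures and the `min_views` cutoff below are hypothetical; in practice the rows would come from your analytics export.

```python
# Hypothetical analytics rows: (page path, pageviews, exits).
pages = [
    ("/pricing", 1200, 900),
    ("/blog/guide", 3000, 600),
    ("/thin-page", 40, 39),
]

def exit_rates(rows, min_views=100):
    """Return (path, exit rate) pairs, highest rate first.

    Pages with fewer than `min_views` pageviews are skipped as too noisy.
    """
    rates = [(path, exits / views) for path, views, exits in rows if views >= min_views]
    return sorted(rates, key=lambda item: item[1], reverse=True)

if __name__ == "__main__":
    for path, rate in exit_rates(pages):
        print(f"{path}: {rate:.0%}")
```

A high exit rate is not always bad (a contact page may be a natural last stop), so read the numbers alongside each page's purpose.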

The Google Algorithm Updates Paradox

Phantom and the other Google algorithm updates are paradoxical in nature. The search giant wants you to create content and optimize your site for the user, not for an algorithm. In fact, ignoring the minute details of these algorithms will probably help you succeed even more than employing a powerful recovery strategy: think offense, not defense. If you begin at a user level, not a search-engine level, you will succeed in creating trustworthy content that people find useful, and the rankings and traffic will follow.