Healthcare AI Citations: How ChatGPT and Google Define Trust Differently

As we head into the new year, we're taking a closer look at critical YMYL (Your Money or Your Life) categories, starting with healthcare. When AI answers health questions, accuracy isn't optional. So which sources does each platform actually trust?

Data Collected

Using BrightEdge AI Catalyst™, we analyzed healthcare citations across ChatGPT, Google AI Mode, and Google AI Overviews over 14 weeks (October 2025 – January 2026) to understand:

- Which source types each platform cites for healthcare queries

- How citation patterns differ by query type (symptoms, definitions, treatments)

- Platform stability and volatility in healthcare citations

- Where Google is experimenting with new content formats like video

Key Finding

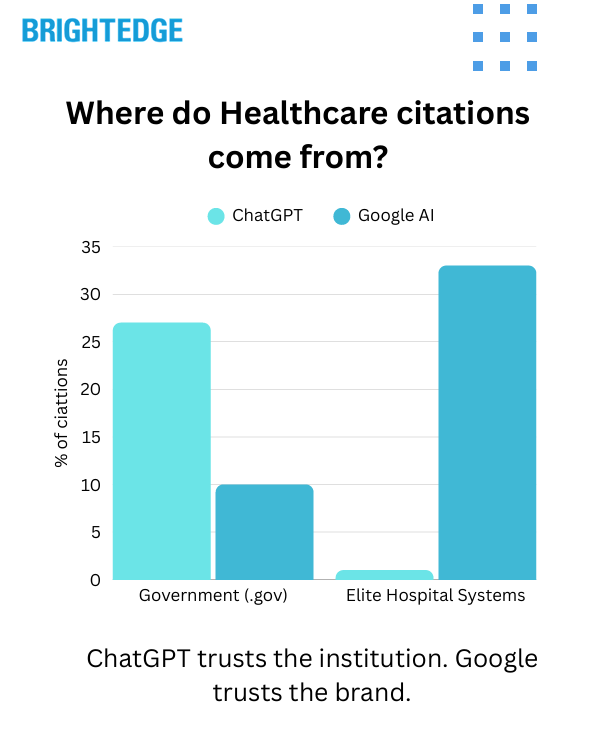

ChatGPT and Google don't agree on what makes a healthcare source authoritative. ChatGPT pulls 27% of its healthcare citations from government sources (.gov) — and just 1% from elite hospital systems. Google AI Overviews flips that entirely: 33% from elite hospital systems, only 10% from government. Same YMYL category, fundamentally different trust signals.

The Trust Gap: Different Platforms, Different Authorities

ChatGPT Trusts the Institution. Google Trusts the Brand.

When we analyzed where healthcare citations actually come from, the platforms diverged sharply:

| Source Type | ChatGPT | Google AI Overviews |
| --- | --- | --- |
| Government (.gov) | 27% | 10% |
| Elite Hospital Systems | 1% | 33% |
| Medical Specialty Orgs | 17% | 2% |
| Consumer Health Media | 7% | 6% |

ChatGPT leans heavily on government sources like CDC, NIH, and FDA — plus medical specialty organizations (professional associations for cardiology, oncology, orthopedics, etc.).

Google AI Overviews goes all-in on elite hospital systems — major academic medical centers and nationally-ranked health systems.

Neither platform relies heavily on consumer health media (popular health information websites). Both prefer institutional authority — they just define it differently.

The Government vs. Consumer Ratio

How much does each platform prefer official sources over consumer health websites?

- ChatGPT: 4.2:1 ratio (strongly favors official sources)

- Google AI Mode: 1.8:1 ratio

- Google AI Overviews: 2.3:1 ratio

ChatGPT's preference for .gov sources over consumer health media is roughly twice as strong as Google's (4.2:1 versus 1.8:1 and 2.3:1).
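A ratio like the ones above can be sketched as a simple division of citation counts. The snippet below is only an illustration: the category labels, the function name `official_to_consumer_ratio`, and the sample counts are hypothetical, and it assumes "official" means government (.gov) citations only, which the report does not spell out.

```python
from collections import Counter


def official_to_consumer_ratio(source_types):
    """Return government citations divided by consumer-media citations.

    Assumption: "official" is treated as government (.gov) only.
    """
    counts = Counter(source_types)
    consumer = counts["consumer_health_media"]
    if consumer == 0:
        raise ValueError("no consumer health media citations to compare against")
    return counts["government"] / consumer


# Hypothetical sample: 21 government, 5 consumer media, 24 other citations.
sample = (
    ["government"] * 21
    + ["consumer_health_media"] * 5
    + ["other"] * 24
)
ratio = official_to_consumer_ratio(sample)
print(f"{ratio:.1f}:1")
```

Citations in other categories do not affect the result, so two platforms with very different source mixes can still be compared on this one ratio.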

Query Type Matters: Different Questions, Different Sources

Symptom Queries Show the Widest Gap

When users ask about symptoms — arguably the most sensitive healthcare searches — the platforms diverge dramatically:

| Platform | % Citations from Major Hospital Systems |
| --- | --- |
| ChatGPT | 57% |
| Google AI Mode | 18% |
| Google AI Overviews | 20% |

ChatGPT concentrates trust heavily for symptom queries, drawing roughly three times the share of citations from major hospital systems that Google does (57% versus 18-20%).

Definition Queries

For "what is" style educational queries:

| Platform | Elite Hospitals | Government | Other |
| --- | --- | --- | --- |
| ChatGPT | 36% | 15% | 29% |
| Google AI Mode | 12% | 11% | 54% |
| Google AI Overviews | 16% | 12% | 45% |

Treatment Queries

For queries about treatments and procedures:

| Platform | Elite Hospitals | Government | Other |
| --- | --- | --- | --- |
| ChatGPT | 52% | 8% | 19% |
| Google AI Mode | 17% | 15% | 46% |
| Google AI Overviews | 20% | 11% | 44% |

The Pattern

ChatGPT concentrates citations on fewer, more authoritative sources — especially for sensitive queries like symptoms and treatments. Google distributes citations across a wider mix of sources.

The Volatility Factor: Stability vs. Experimentation

ChatGPT Has Conviction. Google Is Testing.

Beyond source preferences, the platforms differ dramatically in stability:

| Metric | ChatGPT | Google AI Mode | Google AI Overviews |
| --- | --- | --- | --- |
| Citation Volatility (CV) | 2.1% | 17.1% | 20.2% |
| Week-to-Week Churn | 0.42pp | 2.52pp | 6.94pp |

Measured by coefficient of variation, Google's healthcare citations are 8-10x more volatile than ChatGPT's (17.1% and 20.2% versus 2.1%). What you see in Google AI results today may shift significantly, while ChatGPT's citations remained remarkably stable over our tracking period.
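For readers who want to reproduce these two metrics on their own tracking data, here is a minimal sketch. It assumes the coefficient of variation is the population standard deviation divided by the mean, and that churn is the average absolute week-over-week change in percentage points; the report does not state which exact variants were used. The weekly series and function names are hypothetical.

```python
from statistics import mean, pstdev


def coefficient_of_variation(weekly_shares):
    """Standard deviation expressed as a percentage of the mean."""
    return pstdev(weekly_shares) / mean(weekly_shares) * 100


def mean_weekly_churn(weekly_shares):
    """Average absolute week-over-week change, in percentage points."""
    deltas = [abs(b - a) for a, b in zip(weekly_shares, weekly_shares[1:])]
    return mean(deltas)


# Hypothetical weekly citation shares (percent) for one source type.
stable = [27.0, 27.5, 26.5, 27.0, 27.5, 26.5]
volatile = [10.0, 16.0, 8.0, 14.0, 7.0, 13.0]

for name, series in [("stable", stable), ("volatile", volatile)]:
    cv = coefficient_of_variation(series)
    churn = mean_weekly_churn(series)
    print(f"{name}: CV={cv:.1f}%, churn={churn:.2f}pp")
```

CV is scale-free, so it allows comparison between source types with very different average shares, while churn in percentage points shows the size of a typical weekly move.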

What This Means

ChatGPT appears to have made decisions about healthcare authority and is sticking with them. Google is still actively experimenting — testing different source mixes, adjusting weights, and iterating on what works.

Google's Video Experiment

Where Is Google Testing Video in Healthcare?

One notable difference: Google is experimenting with video content for healthcare answers. ChatGPT isn't citing any video.

- ChatGPT: 0% video citations

- Google AI Overviews: 2.7% video citations

But the volatility tells the real story: video citations in Google AI Overviews swung more than 50 percentage points over just three months (ranging from 22% to 73% of certain result sets). Google hasn't decided whether video belongs in YMYL healthcare content.

Which Query Types Trigger Video?

When Google does cite video for healthcare, it's concentrated in certain query types:

| Query Type | % of Video Citations |
| --- | --- |
| General Health | 51.5% |
| Symptom Exploration | 21% |
| Condition-Specific | 11.4% |
| Definition/Educational | 5.4% |
| Treatment/Remedies | 5% |

Video shows up most on general health queries — not the most sensitive clinical content. Google appears to be testing carefully, starting with lower-stakes queries before expanding to symptoms and treatments.

Citation Priority: What Users See First

Different Platforms Lead With Different Sources

Beyond which sources get cited, we analyzed which sources appear first (lower rank = higher priority):

ChatGPT Citation Priority:

- Crisis/Support Resources (avg rank 1.7)

- Wikipedia (1.8)

- Medical Specialty Orgs (1.8)

- Elite Hospitals (2.0)

- Government (2.3)

Google AI Overviews Citation Priority:

- Elite Hospitals (avg rank 3.3)

- Wikipedia (3.4)

- Medical Specialty Orgs (4.3)

- Government (5.0)

- Consumer Media (6.3)

ChatGPT puts crisis and support resources first, which looks like a deliberate safety choice. Google leads with elite hospital content.

What This Means for Healthcare Marketers

Track Citations Across Both Platforms

ChatGPT and Google have fundamentally different trust signals. You may be winning citations in one platform and invisible in the other. Measure both.

Query Type Matters

Symptom queries, definitions, and treatment searches all pull from different source mixes. Understand which query types your content targets — and who gets cited for each.

Know Your Source Advantage

- Hospital systems: You have an edge on Google AI Overviews

- Government agencies: You have an edge on ChatGPT

- Medical specialty organizations: You have an edge on ChatGPT

- Consumer health media: Neither platform favors you heavily

Google Is Still Testing — ChatGPT Has Decided

Google's 8-10x higher volatility means your visibility there may shift. ChatGPT is more stable, so what you see now is likely what you'll get. Plan accordingly.

Video Is Unsettled

If you're considering video for healthcare content, know that Google's approach is still evolving rapidly. The 50+ percentage point swings suggest this is early experimentation, not settled strategy.

Technical Methodology

Data Source: BrightEdge AI Catalyst™

Analysis Approach:

- Healthcare URL-prompt pairs analyzed across three platforms: ChatGPT, Google AI Mode, Google AI Overviews

- 14 weeks of citation data tracked (October 2025 – January 2026)

- Source categorization by type: Government (.gov), Elite Hospital Systems, Medical Specialty Organizations, Consumer Health Media, Video, Crisis/Support, Wikipedia, and others

- Query intent categorization: Definition, Symptom, Treatment, Condition-Specific, Find Care/Provider, General Health

- Volatility measured using coefficient of variation (CV) and week-over-week percentage point changes
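The source-type categorization step might look something like the sketch below. This is not the BrightEdge implementation: the lookup sets, the `example-...` domains, and the function names are hypothetical stand-ins, and only the `.gov` rule comes directly from the category name used in this report.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical lookup tables; the report does not publish its category lists.
HOSPITAL_DOMAINS = {"example-hospital.org"}
SPECIALTY_ORG_DOMAINS = {"example-cardiology-society.org"}


def categorize(url):
    """Map a cited URL to a source type based on its hostname."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host.endswith(".gov"):
        return "Government (.gov)"
    if host in HOSPITAL_DOMAINS:
        return "Elite Hospital Systems"
    if host in SPECIALTY_ORG_DOMAINS:
        return "Medical Specialty Organizations"
    return "Other"


def category_shares(urls):
    """Return each category's share of citations, in percent."""
    counts = Counter(categorize(u) for u in urls)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {cat: 100 * n / total for cat, n in counts.items()}


cited = [
    "https://www.cdc.gov/",
    "https://www.example-hospital.org/conditions/flu",
    "https://example-cardiology-society.org/guidelines",
    "https://blog.example.com/flu-tips",
]
shares = category_shares(cited)
print(shares)
```

A real pipeline would need much larger curated lists and rules for the remaining categories (consumer health media, video, crisis/support, Wikipedia), which are omitted here.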

Data Volume:

- ChatGPT: 13,181 URL-prompt pairs

- Google AI Mode: 52,876 URL-prompt pairs

- Google AI Overviews: 47,500 URL-prompt pairs

Measurement Period:

- Citation tracking: October 2025 – January 2026

- Volatility analysis: 14-week rolling window

Key Takeaways

- Different Trust Anchors: ChatGPT pulls 27% from government, 1% from elite hospitals. Google AI Overviews pulls 33% from elite hospitals, 10% from government. Fundamentally different definitions of healthcare authority.

- Symptom Queries Diverge Most: 57% of ChatGPT's symptom-query citations come from major hospital systems vs. 18-20% for Google. ChatGPT concentrates trust; Google distributes it.

- ChatGPT Is 8-10x More Stable: Google's healthcare citations show 17-20% volatility (CV) vs. ChatGPT's 2%. Google is experimenting; ChatGPT has decided.

- Neither Trusts Consumer Health Media: Both platforms prefer official sources over popular health websites, by ratios of roughly 2:1 to 4:1.

- Video Is Google's Experiment: Google is testing video (2.7% of citations) while ChatGPT cites none. But 50pp swings suggest Google hasn't settled on whether video belongs in YMYL healthcare.

- Query Type Determines Source Mix: Different query intents (symptoms vs. definitions vs. treatments) pull from different source distributions. One-size-fits-all optimization won't work.

Published on January 08, 2026